Web Scraping

Tracking information published on the websites of 800 law firms in the US.

Customer Requirement

The customer needed to keep track of information published on the websites of 800 law firms in the US.

Challenge

- Keeping tabs on the ever-changing information on the internet

- Ensuring that updates were reflected in the customer database within 24 hours of the change

- Each website had a different structure for presenting information

- Some websites presented the same type of information inconsistently on two different pages

- Some websites used advanced technologies to present information, from which the information could not be easily extracted

Solution

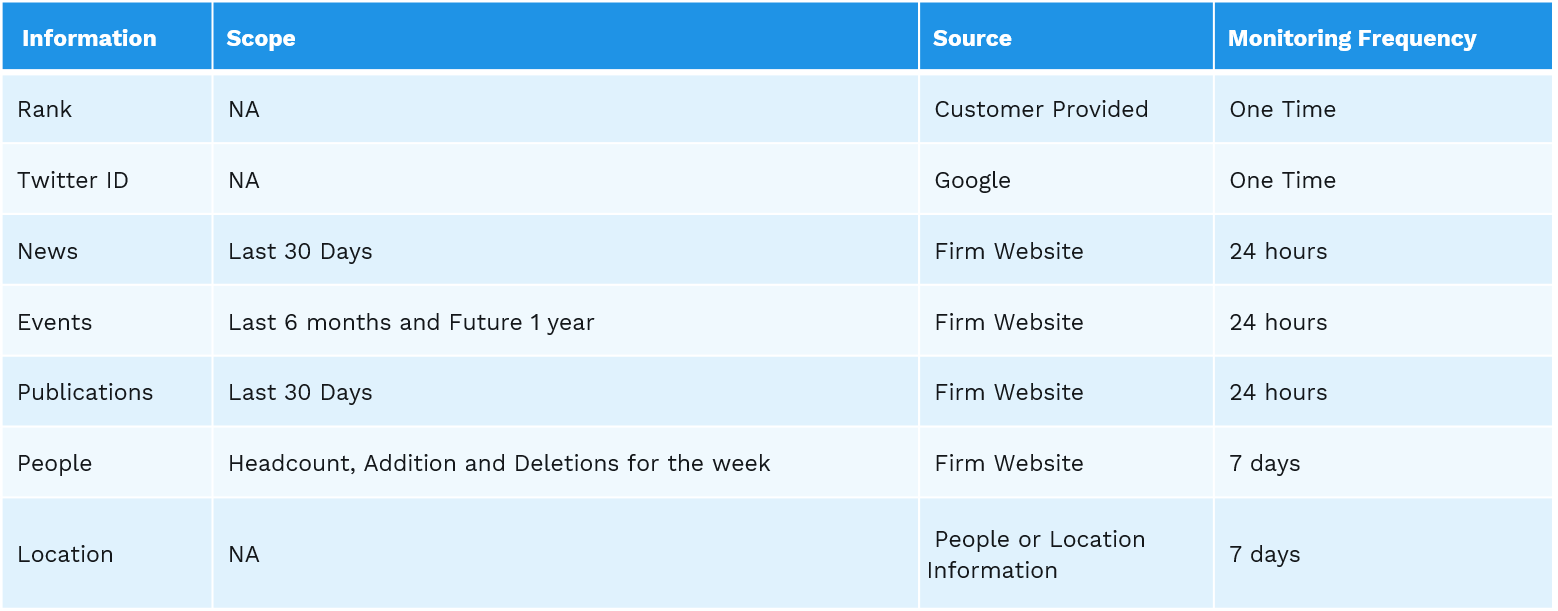

The customer was interested in the following information from each of the websites.

The rank was provided by the customer, and Google was used to look up each law firm’s Twitter handle. All other information had to be checked and kept accurate and up to date in the customer’s database.

Following a feasibility analysis of all the websites, Exela grouped them into two categories.

Structured websites, which made up 85% of the total volume, had a consistent shell for presenting information. Exela used a tool that allowed a parser to be set up on the pages of interest. The HTML tags containing the information were analyzed and configured in the parser. The parser ran every night, extracted the information from each website, and pushed it into the customer’s database. Scripts were deployed to report inconsistent or junk information in the table, and the necessary actions were taken to fix any issues.
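The tag-based extraction and the junk-reporting step described above can be illustrated with a minimal sketch. It is not the tool Exela used, which is not named here; it relies only on Python's standard `html.parser` module. The rule table `FIRM_RULES`, the field names, the CSS class names, and the `max_len` threshold are all hypothetical and chosen purely for illustration.

```python
from html.parser import HTMLParser

# Hypothetical per-site rule: field name -> (HTML tag, class attribute value).
# In practice each structured site would get its own rule table.
FIRM_RULES = {
    "name": ("h1", "firm-name"),
    "phone": ("span", "phone"),
    "address": ("div", "address"),
}


class FieldParser(HTMLParser):
    """Collects the text inside each tag/class pair listed in the rules."""

    def __init__(self, rules):
        super().__init__()
        self.rules = rules
        self.fields = {}
        self._current = None  # field currently being captured, if any
        self._depth = 0       # nesting depth of same-named tags while capturing

    def handle_starttag(self, tag, attrs):
        if self._current is not None:
            # Track nested tags of the same name so we stop at the right close tag.
            if tag == self.rules[self._current][0]:
                self._depth += 1
            return
        classes = (dict(attrs).get("class") or "").split()
        for field, (rule_tag, rule_class) in self.rules.items():
            if tag == rule_tag and rule_class in classes:
                self._current, self._depth = field, 1
                self.fields[field] = ""
                break

    def handle_endtag(self, tag):
        if self._current is not None and tag == self.rules[self._current][0]:
            self._depth -= 1
            if self._depth == 0:
                # Collapse runs of whitespace before storing the value.
                text = self.fields[self._current]
                self.fields[self._current] = " ".join(text.split())
                self._current = None

    def handle_data(self, data):
        if self._current is not None:
            self.fields[self._current] += data


def extract(html, rules):
    """Return a dict of the fields found in one page."""
    parser = FieldParser(rules)
    parser.feed(html)
    parser.close()
    return parser.fields


def find_problems(record, rules, max_len=200):
    """Report fields that are missing, empty, or suspiciously long (assumed junk)."""
    problems = []
    for field in rules:
        value = record.get(field, "")
        if not value:
            problems.append(f"{field}: missing or empty")
        elif len(value) > max_len:
            problems.append(f"{field}: longer than {max_len} characters")
    return problems
```

In a nightly job, `extract(page_html, FIRM_RULES)` would be called for each page of interest, and any record for which `find_problems` returns a non-empty list would be held back for review rather than written to the database. Fetching the pages and writing to the database are left out because those details are not described here.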

Unstructured and advanced websites, which made up 15% of the volume, did not present the same information in a consistent way. Information of interest on those websites was extracted using a combination of external and internal tools that required very limited human intervention.